Chinchilla by DeepMind - AI Tool

A GPT-3 rival by DeepMind

About Chinchilla by DeepMind

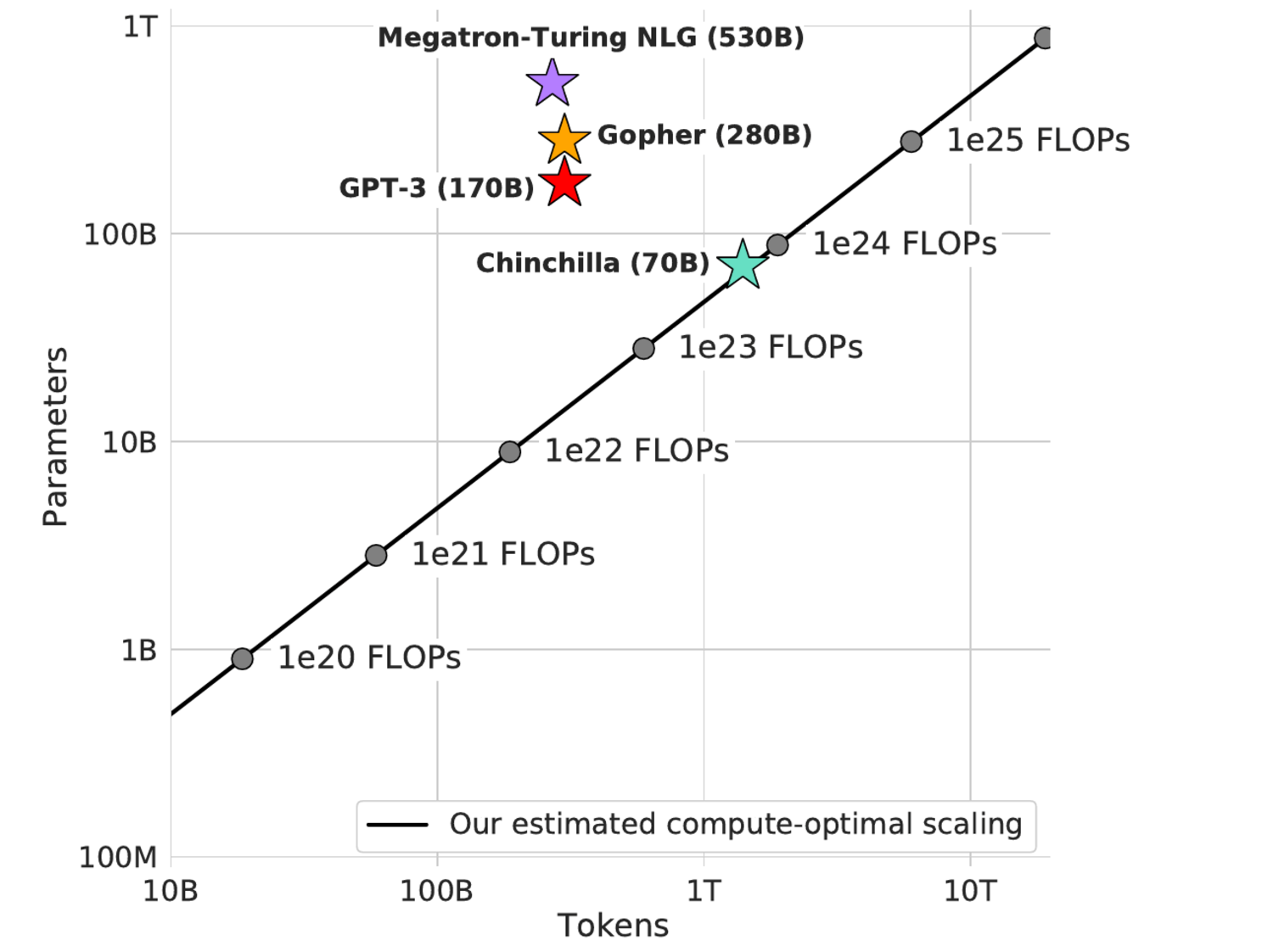

Researchers at DeepMind have proposed Chinchilla, a model predicted to be compute-optimal. It uses the same compute budget as Gopher but has 70 billion parameters (a quarter of Gopher's 280 billion) and is trained on 4 times more data.
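To see why a model a quarter of the size trained on 4 times the data lands on roughly the same compute budget, here is a minimal sketch. It assumes the common rule of thumb that training compute scales as about 6 × parameters × tokens; that approximation, the function name `training_flops`, and the placeholder token count are assumptions for illustration and do not come from the article.

```python
# Rough training-compute estimate: FLOPs ~ 6 * parameters * tokens.
# This rule of thumb is an assumption of the sketch, not a figure from the article.
def training_flops(params: float, tokens: float) -> float:
    return 6.0 * params * tokens

GOPHER_PARAMS = 280e9      # Gopher size, as stated in the article
CHINCHILLA_PARAMS = 70e9   # Chinchilla size, as stated in the article
DATA_MULTIPLIER = 4.0      # "4 times more data", as stated in the article

# Hypothetical placeholder: the article does not give Gopher's token count.
# The ratio computed below does not depend on this value.
gopher_tokens = 1.0

gopher = training_flops(GOPHER_PARAMS, gopher_tokens)
chinchilla = training_flops(CHINCHILLA_PARAMS, DATA_MULTIPLIER * gopher_tokens)

# Ratio of the two estimated compute budgets.
compute_ratio = chinchilla / gopher
print(f"Chinchilla / Gopher compute: {compute_ratio:.2f}")
```

Under this approximation the two factors of four cancel, so the budget is spent on more tokens rather than more parameters.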

Chinchilla uniformly and significantly outperforms Gopher (280B), GPT-3 (175B), Jurassic-1 (178B), and Megatron-Turing NLG (530B) on a wide range of downstream evaluation tasks. Because it is smaller, it also uses substantially less compute for fine-tuning and inference, which greatly facilitates downstream usage.

Chinchilla reached a state-of-the-art average accuracy of 67.5% on the MMLU benchmark, an improvement of more than 7 percentage points over Gopher.

The dominant trend in large language model training has been to increase model size without increasing the number of training tokens. The largest dense transformer, MT-NLG 530B, is now over 3× larger than GPT-3's 175 billion parameters.

Source: https://analyticsindiamag.com/deepmind-launches-gpt-3-rival-chinchilla/